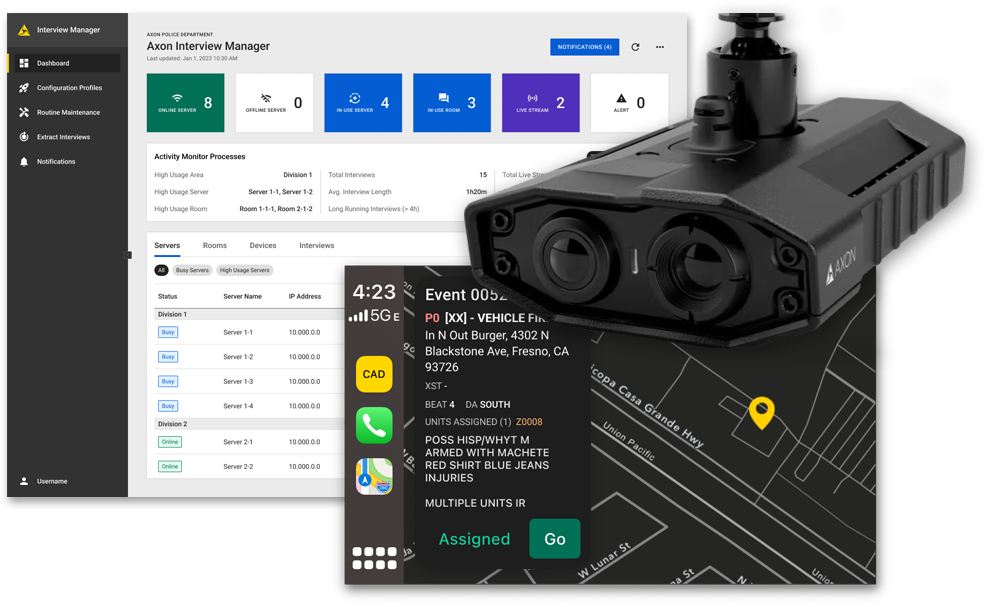

When I joined Axon in 2021, the product ecosystem had grown organically across devices and applications. Each implementation worked in isolation, but together they lacked a unified and seamless user experience.

Consistent customer feedback called out these functional inconsistencies, highlighting the urgent need for a cohesive experience across the platform. A key aspect of my work focused on building a principled design framework, measurable processes, and team alignment to deliver connected, reliable, and scalable experiences.

Design + Research Strategy and Planning

Design / Creative Direction

Cross-team Feature Planning

Team Lead / Mentoring and Management

4 UX Designers

1 UX Researcher

18 PMs

56 Engineers

3 Years for multiple projects and implementations.

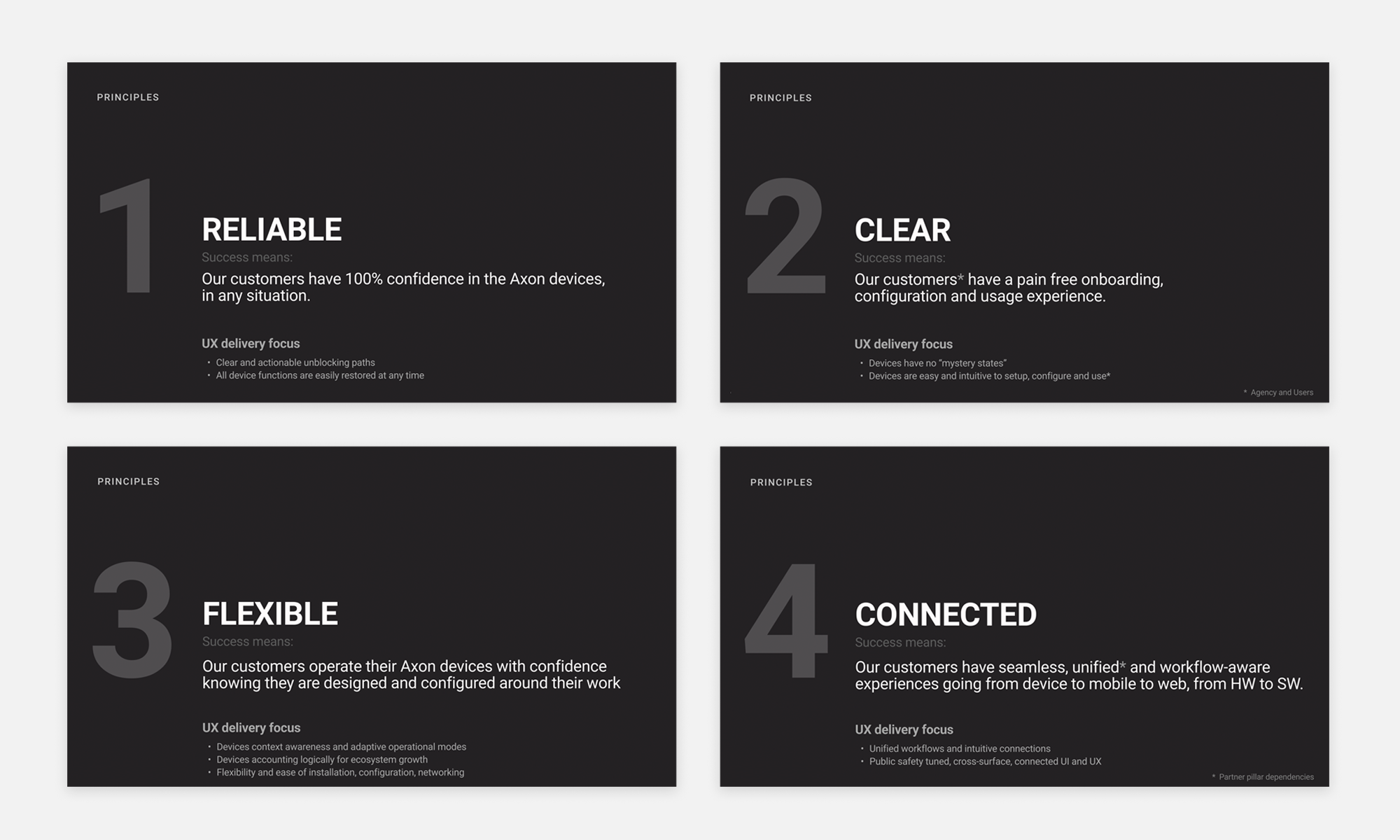

Defining the Approach

I began by developing a principle-driven design methodology. This approach became the foundation for research, production, and measurement, guiding how we crafted connected experiences for first responders.

Establishing Experience Design Principles and Metrics

Through product audits, user interviews, ride-alongs, and stakeholder discussions, I synthesized insights into core themes (➊). These informed experience design principles and customer promises, which also became the foundation for experience measurement.

Experience Design Principles (➋):

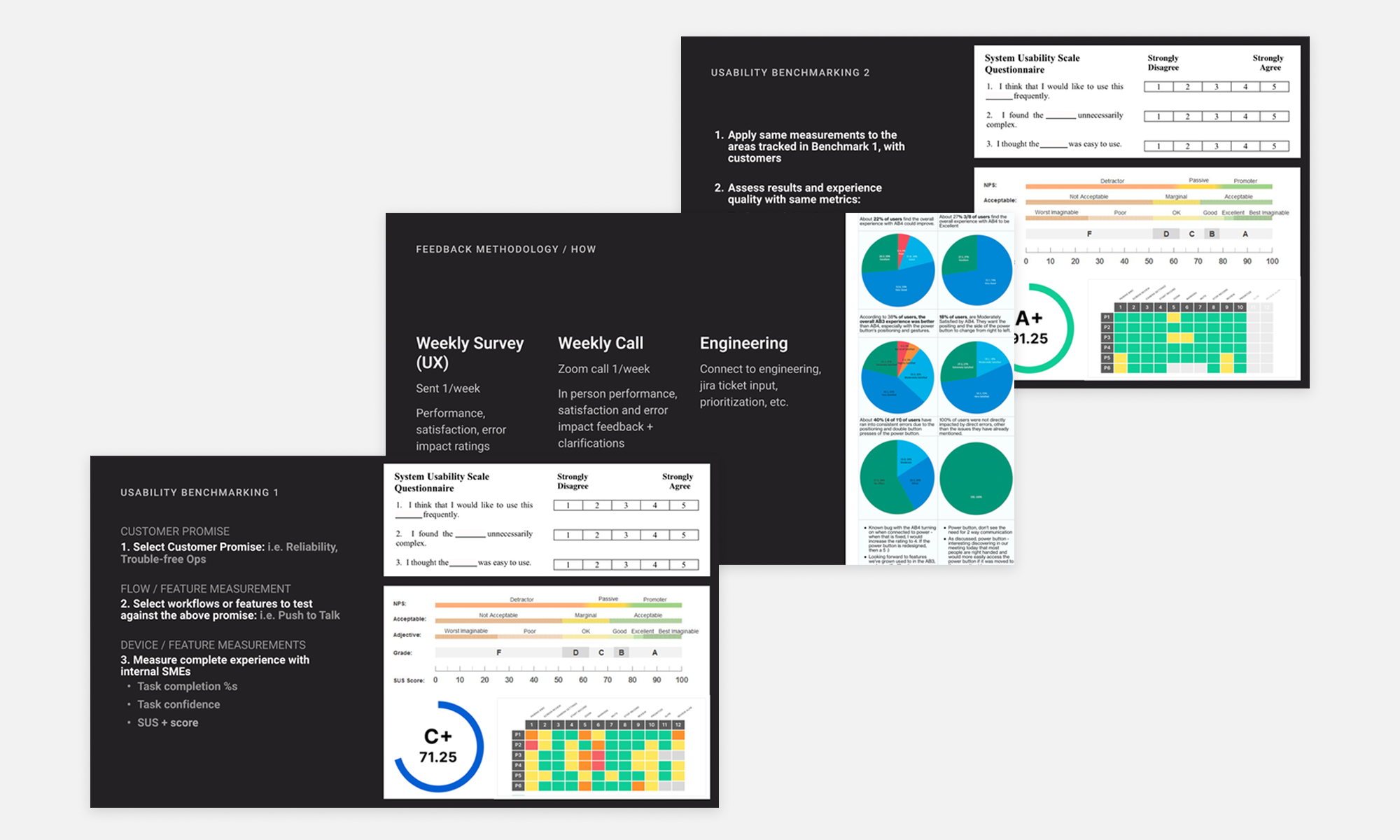

The Measurement Process

To ensure design quality and track progress, I introduced a measurement framework (➌) with structured checkpoints for feedback and validation.

This allowed us to make data-driven design decisions and continuously optimize the customer experience.

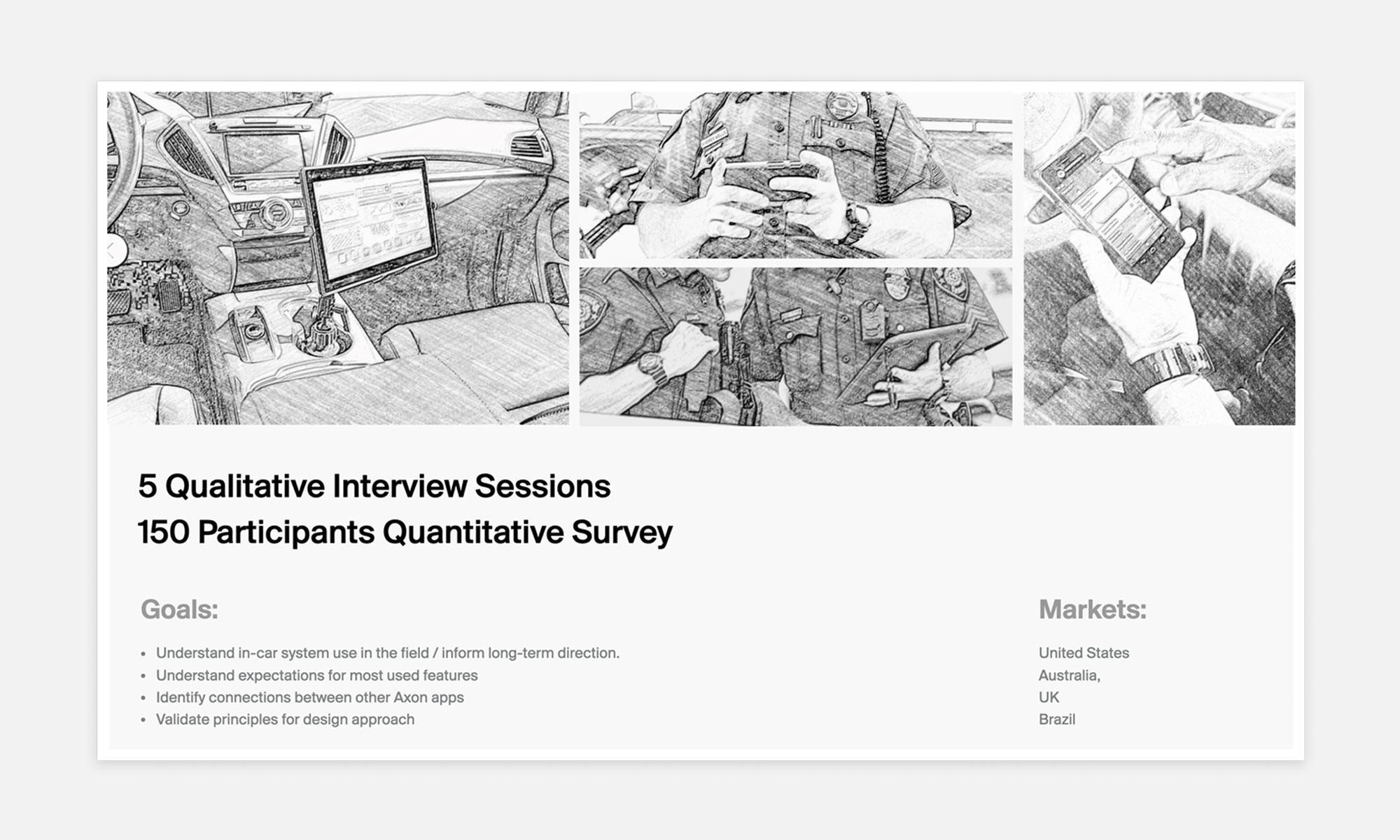

I first applied this framework to the Axon Fleet in-car camera system companion app, transitioning it from a Windows laptop tool to mobile-first experiences across iOS and Android phones and tablets for global markets.

After months of research (➍), design (➎), prototyping (➏), and development, supported by ongoing testing, the app reached beta readiness (➐).

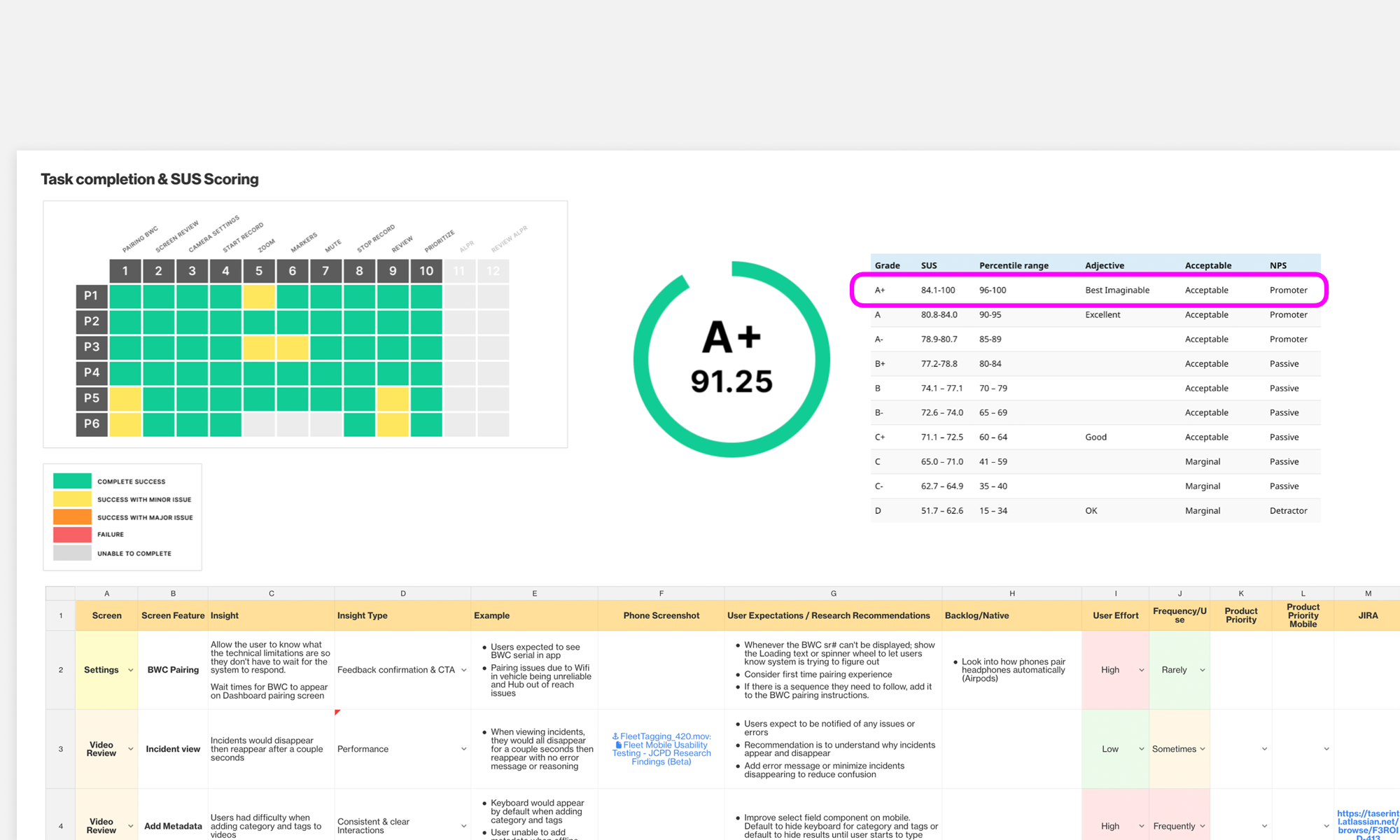

Once the beta was ready, we applied the measurement steps, with the following results:

Takeaways

The structured, principled process gave the team confidence in release readiness while proving measurable improvements through each phase.

This approach, now embedded in Axon’s product development, ensures that features and products are well designed and consistently evaluated. It incorporates user input at every stage and improves both customer experience and planning along the way.

Features are well-designed and systematically evaluated.

User feedback is incorporated at every stage.

3% NPS increase for Fleet 3; 5% increase for AB4 (body-worn camera).